Google is betting big on AI in search, with multisearch helping users find what they’re looking for using a combination of images and text.

Google’s multisearch feature is reinventing traditional search by using AI to help users find what they’re looking for with a combination of images and text. Multisearch was announced in April 2022, but at the time was available only in beta. The feature is part of Google’s push to deeply integrate AI into search. While Google is currently developing Bard as a ChatGPT rival, it’s also expanding the capabilities of Lens, which will soon let users search their screen and find information visually, rather than having to type out a search query.

Multisearch is also a Google Lens feature that relies primarily on images. It’s ideal for finding out more about something that can’t be adequately described in a text search. For example, users will be able to upload an image of a green coffee mug they saw in a friend’s house, and then type “yellow” to find shopping sites that sell similar, if not identical, mugs in yellow. Since Lens is involved, users are far more likely to find the exact same product when uploading an image.

How To Use Google’s Multisearch

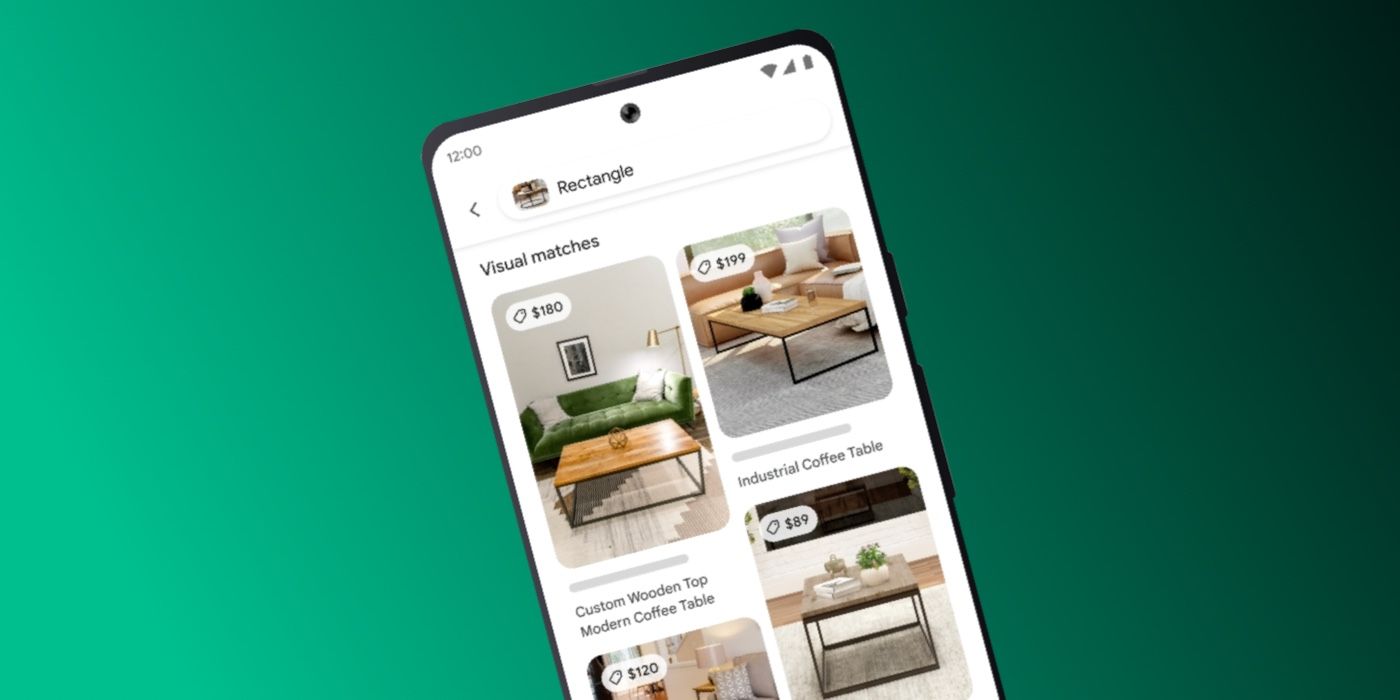

Google offers other examples of how multisearch can be used, and it is sure to make searching for specific products much easier. For example, users can upload a picture of their dining set and then type “coffee table” to find a product that has a similar design. Multisearch can even be used for everyday searches. Users can upload an image of a basil plant and type “care tips,” or a screenshot of nail art with the text “tutorial” to get relevant information.

Multisearch doesn’t just work when users snap a picture or upload an image. It’s also integrated into every image in Google search results. For example, when browsing images for “minimalist home decor ideas,” users may come across a lamp they like, but want to see if it’s available in a different shape. Users will be able to tap the Google Lens icon to highlight the lamp, and then type “round” to see similar lamps in a round shape. This feature isn’t available yet, but will be rolling out in the next few months.

Google is also bringing the ability to search locally to multisearch. This allows users to make purchases from local retailers and small businesses. Users will be able to upload a photo of a product and type “near me” to see if it’s available nearby. This feature is rolling out in English in the U.S., and according to Google, will be available globally in the coming months.

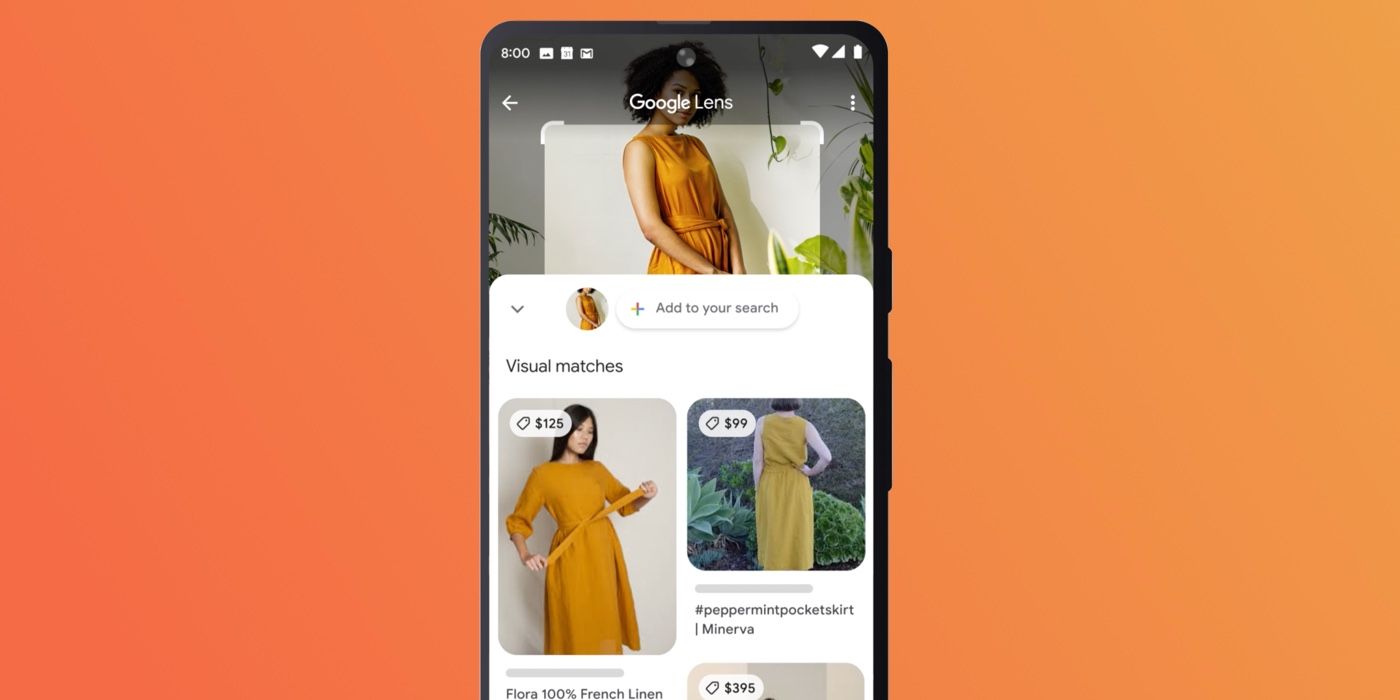

Multisearch with Lens is now available in all countries and languages where Google Lens can be accessed. To try out the feature, users will need the Google app for iOS or Android, making sure that it’s updated to the latest version. Next, open the app and tap on the Google Lens camera icon. Either snap a picture using the app’s built-in camera or upload an image or screenshot from the phone’s gallery. Then, swipe up and tap the “+ Add to your search” button to add text. By adding visual elements to text prompts, Google is making search more intuitive for smartphone users around the world.

Source: Google
